Computational lens unmasks hidden 3D information from a single 2D micrograph

May 29, 2024

National University of Singapore (NUS) physicists have developed a computational imaging technique that extracts three-dimensional (3D) information from a single two-dimensional (2D) electron micrograph. Because the method can be implemented on most transmission electron microscopes (TEMs), it offers a practical way to rapidly image large areas in 3D at nano-scale resolution (approximately 10 nm).

Understanding structure-function relationships is crucial for nanotechnology research, including fabricating complex 3D nanostructures, observing nanometre-scale reactions, and examining self-assembled 3D nanostructures in nature. However, most structural insights are currently limited to 2D, because existing nano-scale 3D imaging tools are neither rapid nor easily accessible: they require specialised instrumentation or large facilities such as synchrotrons. A research team at NUS addressed this challenge by devising a computational scheme that uses the physics of electron-matter interaction, together with known material priors, to determine the local depth and thickness of each region of the specimen. Just as a pop-up book turns flat pages into three-dimensional scenes, the method uses these local depth and thickness values to build a 3D reconstruction of the specimen, offering structural insights that a 2D projection alone cannot provide.
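The pop-up step described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation, and it assumes a single homogeneous layer whose centre of mass lies on its mid-plane, so the top and bottom surfaces sit half a thickness on either side of the measured depth. The thickness map is obtained here from the energy-filtered TEM log-ratio relation t = λ·ln(I_total / I_zero-loss), a standard way to estimate local thickness; whether the authors derive thickness this way, the mean-free-path value, and the function names `thickness_log_ratio` and `pop_out_surfaces` are all assumptions for illustration.

```python
import numpy as np

def thickness_log_ratio(i_total, i_zero_loss, mean_free_path):
    """Local thickness map (same units as mean_free_path) from the EFTEM
    log-ratio relation t = mean_free_path * ln(I_total / I_zero_loss)."""
    i_total = np.asarray(i_total, dtype=float)
    i_zero_loss = np.asarray(i_zero_loss, dtype=float)
    return mean_free_path * np.log(i_total / i_zero_loss)

def pop_out_surfaces(z_com, thickness):
    """'Pop up' a 2D depth map into top and bottom surface maps.

    Assumption: one homogeneous layer, so the centre-of-mass depth z_com
    lies on its mid-plane; depth z increases along the beam direction.
    Pixels with no material (thickness <= 0) are returned as NaN.
    """
    z_com = np.asarray(z_com, dtype=float)
    thickness = np.asarray(thickness, dtype=float)
    solid = thickness > 0
    half = thickness / 2
    top = np.where(solid, z_com - half, np.nan)
    bottom = np.where(solid, z_com + half, np.nan)
    return top, bottom

# Hypothetical 2x2 example: a 20 nm-thick region next to a through-hole.
i_zl = np.array([[1.0, 1.0], [1.0, 1.0]])
i_tot = np.array([[np.exp(0.2), np.exp(0.2)], [1.0, 1.0]])
t = thickness_log_ratio(i_tot, i_zl, mean_free_path=100.0)  # nm, assumed value
z_com = np.full((2, 2), 50.0)  # nm, e.g. from local defocus estimates
top, bottom = pop_out_surfaces(z_com, t)
```

In this toy input the first row becomes a 20 nm slab spanning depths 40 to 60 nm, while the second row, which has no inelastic signal, is marked as empty. A real specimen with non-uniform density through its thickness would break the mid-plane assumption.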

Led by Assistant Professor N. Duane LOH from the Departments of Physics and Biological Sciences at NUS, the research team found that the speckles in a TEM micrograph encode information about the depth of the specimen. The team explained mathematically why the local defocus values measured from a TEM micrograph point to the specimen’s centre of mass. Their derived equation also shows that a single 2D micrograph has a limited capacity to convey 3D information: the thicker the specimen, the harder it is to determine its depth accurately.
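Both effects can be illustrated with a deliberately simplified toy model. This is not the authors' derivation: it treats an amorphous, weak-phase specimen as uncorrelated thin slices at depths z_i, each imaged with an effective defocus Δf + z_i, and it ignores spherical aberration and partial coherence. Because sin²x = (1 - cos 2x)/2, the summed Thon-ring spectrum equals the spectrum of a single plane at the centre of mass multiplied by a contrast envelope. The identity is exact when the depths are symmetric about their weighted mean and holds to leading order otherwise. For a uniform slab of thickness t, the envelope approaches sin(at)/(at) with a = πλq², so ring contrast fades at lower spatial frequencies as t grows. The function names, the 300 kV wavelength and the defocus value are illustrative assumptions.

```python
import numpy as np

# Toy model (an assumption, not the published derivation): weak-phase
# amorphous slices at depths z (nm) with scattering weights w, each imaged
# with effective defocus (defocus + z). Uncorrelated slices add incoherently
# in the power spectrum. Spherical aberration and partial coherence ignored.

def ring_spectrum(q, wavelength, defocus, z, w):
    """Weighted mean of per-slice Thon-ring spectra sin^2(chi_i)."""
    a = np.pi * wavelength * q[:, None] ** 2   # shape (n_q, 1), units 1/nm
    chi = a * (defocus + z[None, :])           # shape (n_q, n_slices)
    return (w[None, :] * np.sin(chi) ** 2).sum(axis=1) / w.sum()

def centre_of_mass_model(q, wavelength, defocus, z, w):
    """Single-plane spectrum at the centre of mass times a contrast envelope.

    Exact when the depths are symmetric about their weighted mean;
    otherwise correct to leading order in the depth spread.
    """
    a = np.pi * wavelength * q ** 2
    z_com = np.average(z, weights=w)
    delta = z - z_com
    envelope = np.average(np.cos(2 * a[:, None] * delta[None, :]),
                          axis=1, weights=w)
    spectrum = 0.5 - 0.5 * envelope * np.cos(2 * a * (defocus + z_com))
    return spectrum, envelope

def uniform_slab(z_centre, thickness, n_slices=201):
    """Equally weighted slices spanning a slab of the given thickness (nm)."""
    z = np.linspace(z_centre - thickness / 2, z_centre + thickness / 2, n_slices)
    return z, np.ones_like(z)

if __name__ == "__main__":
    q = np.linspace(0.1, 3.0, 300)  # spatial frequency, 1/nm
    wavelength = 0.00197            # nm, roughly 300 kV electrons (assumed)
    defocus = 1000.0                # nm (assumed)
    z, w = uniform_slab(50.0, 40.0)
    full = ring_spectrum(q, wavelength, defocus, z, w)
    model, _ = centre_of_mass_model(q, wavelength, defocus, z, w)
    print("matches single plane at centre of mass:", np.allclose(full, model))
    for t in (10.0, 100.0):
        _, env = centre_of_mass_model(q, wavelength, defocus, *uniform_slab(50.0, t))
        print(f"thickness {t:5.1f} nm, mean |envelope| = {np.abs(env).mean():.3f}")
```

Comparing a 10 nm and a 100 nm slab with the same centre gives identical ring positions (both are set by defocus plus the centre of mass) but a much weaker envelope for the thicker slab, which is one way to picture why depth becomes harder to pin down as thickness increases.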

The authors further extended their method to show that this pop-out metrology technique can be applied simultaneously to multiple specimen layers, given some additional priors. This advancement opens the door to rapid 3D imaging of complex, multi-layered samples.
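With several layers, the measured centre of mass is a single weighted average, so on its own it cannot place each layer: one measurement leaves two unknown depths. The hypothetical two-layer sketch below shows how priors can close that gap, assuming the priors are each layer's areal mass (for example, density times thickness) and the spacing between the layers. The source does not say which priors the authors use, and `locate_two_layers` is an illustrative name.

```python
def locate_two_layers(z_com, mass_1, mass_2, spacing):
    """Recover the depths of two layers from their combined centre of mass.

    Hypothetical priors (assumptions, not the published method):
      mass_1, mass_2 -- areal masses of the upper and lower layer
      spacing        -- separation z_2 - z_1 between the layers
    z_com is the measured centre-of-mass depth, e.g. from local defocus.
    """
    total = mass_1 + mass_2
    if total <= 0:
        raise ValueError("layer masses must sum to a positive value")
    # z_com = (m1*z1 + m2*z2) / (m1 + m2), with z2 = z1 + spacing,
    # so z1 = z_com - m2 * spacing / (m1 + m2).
    z_1 = z_com - mass_2 * spacing / total
    return z_1, z_1 + spacing

# Example: layers at 10 nm and 60 nm with masses 1 and 3 give z_com = 47.5 nm.
upper, lower = locate_two_layers(47.5, 1.0, 3.0, 50.0)
```

Without the spacing (or some equivalent prior), any pair of depths with the same weighted mean would fit the measurement equally well.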

The research findings were published in the journal Communications Physics.

This research continues the team’s ongoing integration of machine learning with electron microscopy to create computational lenses for imaging otherwise invisible dynamics at the nano-scale.

Dr Deepan Balakrishnan, the first author, said, “Our work shows the theoretical framework for single-shot 3D imaging with TEMs. We are developing a generalised method using physics-based machine learning models that learn material priors and provide 3D relief for any 2D projection.”

The team also envisions generalising the formulation of pop-out metrology beyond TEMs to any coherent imaging system for optically thick samples (e.g., X-rays, electrons and visible-light photons).

Prof Loh added, “Like human vision, inferring 3D information from a 2D image requires context. Pop-out is similar, but the context comes from the material we focus on and our understanding of how photons and electrons interact with them.”

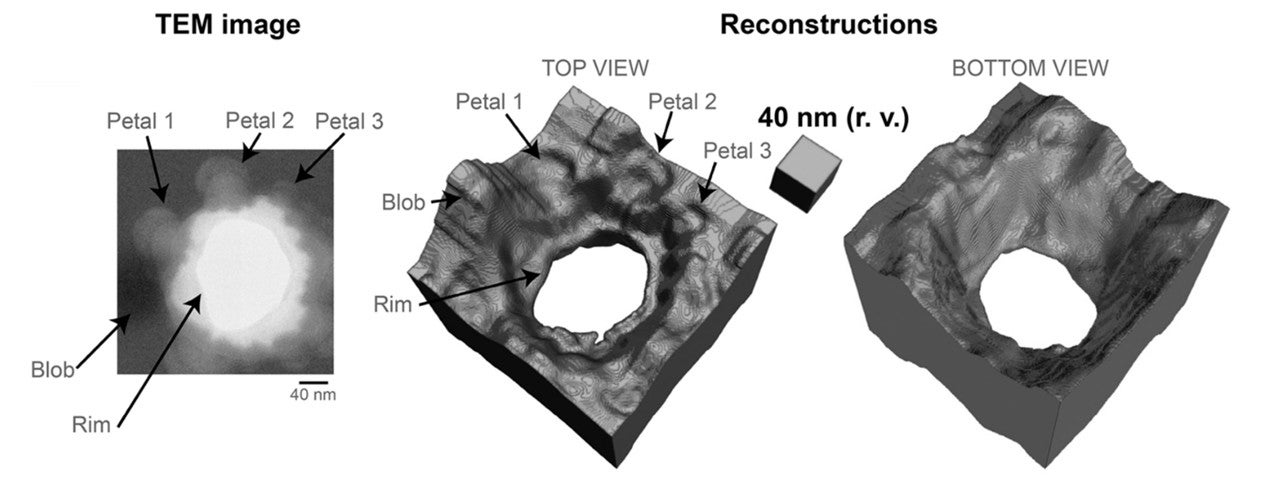

Figure, from left to right: an energy-filtered transmission electron microscopy image of a specimen with features on both sides, including a nano-pit etched through an amorphous silicon nitride (SiNx) membrane; the top view of the 3D reconstruction, showing etching artifacts such as the rim, petals and a debris blob; and the bottom view, showing the nano-pit opening widening towards the bottom surface. [Credit: Communications Physics]

References

[1] D. Balakrishnan*, S.W. Chee, Z. Baraissov, M. Bosman, U. Mirsaidov, N.D. Loh*, “Single-shot, coherent, pop-out 3D metrology”, Communications Physics, Vol. 6, Issue 1 (2023). DOI: 10.1038/s42005-023-01431-6

[2] D. Balakrishnan*, S.W. Chee, M. Bosman, N.D. Loh, “TEM-based single-shot coherent 3D imaging”, Adaptive Optics and Applications, paper JW5A.3 (2022). DOI: 10.1364/3D.2022.JW5A.3